AI Training Resource Hub: Government policy, research papers, AI tools, and useful links to support AI literacy and cybersecurity awareness across the Northern Territory.

How AI is Reshaping College for Students and Professors

PBS NewsHour report on the first senior class to spend nearly their entire college career in the age of generative AI. Explores how AI is shaking academia to its core as AI-generated work becomes harder to distinguish from human work.

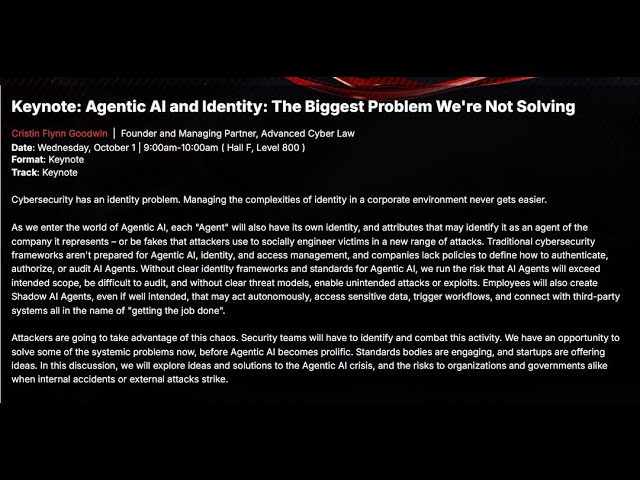

Keynote: Agentic AI and Identity - The Biggest Problem We're Not Solving

Cybersecurity has an identity problem. This keynote addresses the complexities of managing identity in corporate environments as agentic AI systems introduce new attack vectors and authentication challenges.

Your Hot Takes on A.I. & Education

New York Times Hard Fork episode featuring student perspectives on AI in education. Some students describe using AI as a personalised learning tool, while others raise concerns about plagiarism detection and equity.

Educating Kids in the Age of A.I. | Rebecca Winthrop

Rebecca Winthrop discusses how AI is forcing a re-evaluation of education's purpose. Explores declining student engagement with deep reading and the risk of AI enabling "passenger mode" learning.

AI can do your homework. Now what?

Vox explores educators' dilemma between banning AI and teaching responsible use. Highlights the concept of "desirable difficulties": effortless AI experiences may create the illusion of learning without actual cognitive development.

How AI is being used in our schools | 7.30

ABC's 7.30 examines AI integration in Australian schools, from full adoption to initial bans. Features EduChat, a curriculum-specific AI tool designed to enhance literacy and critical thinking.

What Everyone Gets Wrong About AI and Learning | Veritasium

Derek Muller examines past tech failures in education to caution against AI overhype. Argues that effective learning requires effortful practice engaging "System 2" thinking, and that AI's main risk is enabling students to bypass essential cognitive work.

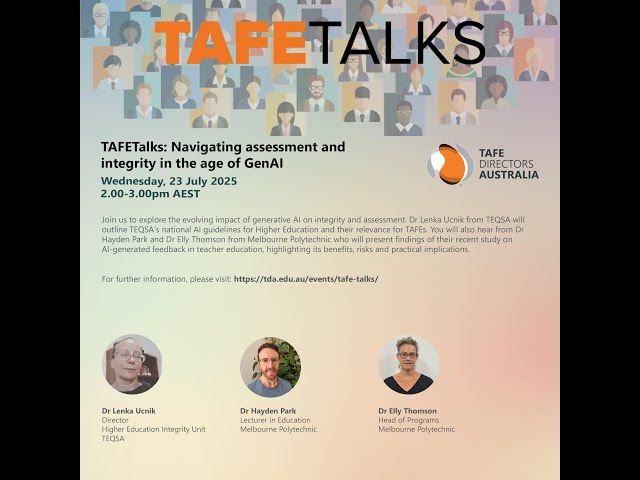

TAFETalks: Navigating Assessment and Integrity in the Age of GenAI

TEQSA and Melbourne Polytechnic experts discuss GenAI's impact on tertiary education assessment and integrity. Study finds students prefer human feedback despite AI's detailed responses.

Threat Intelligence: How Anthropic Stops AI Cybercrime

Anthropic's Jacob Klein and Alex Moix discuss detecting and preventing AI cybercrime, including the North Korea fraud scheme and "vibe hacking" techniques.

How I use LLMs | Andrej Karpathy

Andrej Karpathy's practical guide to using LLMs effectively. Covers the expanding ecosystem beyond ChatGPT, choosing appropriate models, managing token windows, and leveraging features like custom instructions.

Australia's AI Ethics Principles

The eight foundational AI Ethics Principles established in 2019, including human-centred values, fairness, privacy protection, transparency, contestability, and accountability. Forms the cornerstone for responsible AI in Australia.

Guidance for AI Adoption (October 2025)

Updated voluntary AI Safety Standard streamlined from 10 to 6 essential practices: governance, impact assessment, risk management, transparency, testing/monitoring, and human oversight.

Australian Framework for Generative AI in Schools

National framework approved by Education Ministers in October 2023, setting out six guiding principles for responsible AI use in schools. A review endorsed in June 2025 confirmed that it remains fit for purpose.

TEQSA: Assessment Reform in the Age of AI

Comprehensive TEQSA guidance on evolving risk to academic integrity. Emphasises assessment design, two-lane approaches, and the shift from detection to learning validation.

NT AI Assurance Framework

Northern Territory Government AI governance framework implementing the National Framework for Assurance of AI in Government. Mandatory for all NT agencies using AI components.

Australian Government AI Technical Standard

Technical requirements from the Digital Transformation Agency for AI system development, deployment, and operation across government agencies.

Framework for Governance of Indigenous Data

Landmark NIAA policy co-designed with Aboriginal and Torres Strait Islander partners. Implementation commenced January 2025 with seven-year plans required across government.

Social Media Age Restrictions

eSafety Commissioner guidance on the Online Safety Amendment (Social Media Minimum Age) Act 2024. Age-restricted platforms must prevent under-16s from having accounts from 10 December 2025.

My Face, My Rights Bill 2025

Proposed crossbench legislation to criminalise non-consensual deepfakes with penalties up to 7 years imprisonment. Includes carve-outs for journalism, satire, and good faith law enforcement.

EU AI Act (Regulation 2024/1689)

The world's first comprehensive legal framework for AI. Prohibitions on certain practices have applied since February 2025, including subliminal manipulation, social scoring, and untargeted scraping of facial images to build facial recognition databases.

OpenAI ChatGPT

Leading conversational AI platform with educational features including Study Mode. Free tier available with GPT-4o mini; Plus subscription provides access to GPT-4o and o1 reasoning models.

Anthropic Claude

Advanced AI assistant with constitutional AI safety focus and Learning Mode. Current flagship is Claude 3.5 Sonnet with exceptional performance across text generation, coding, and complex reasoning.

Google Gemini

Google's AI platform integrated with Workspace for Education, offering Guided Learning Mode. Deep integration with Google services including Search, Docs, and Gmail.

Perplexity AI

Citation-first AI search engine designed to address hallucination by retrieving actual sources before generating responses. Excellent for research tasks requiring verifiable information.

xAI Grok

AI assistant integrated with X (formerly Twitter) platform. Known for real-time information access and less restrictive content policies compared to competitors.

Hugging Face Model Hub

The "GitHub for AI models": a central hub for open-source AI with thousands of pre-trained models, datasets, and tools. Essential resource for local AI deployment.

Ollama

Command-line tool for running AI models locally. Handles model management and quantisation, and provides local API endpoints. Excellent for getting started with private AI deployment.
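To make "local API endpoints" concrete, here is a minimal sketch of calling a locally running Ollama server from Python using only the standard library. It assumes Ollama is running on its default address (http://localhost:11434) and that a model has already been pulled; the model name `llama3.2` and the helper names `build_request` and `generate` are illustrative assumptions, not part of Ollama itself.

```python
import json
import urllib.request

# Ollama's default local address; adjust if your installation differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3.2"):
    """Build a POST request for Ollama's generate endpoint.

    The model name is an assumption: use whichever model you have pulled.
    """
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one complete JSON reply instead of a stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3.2"):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

With the server running, `generate("Explain phishing in one sentence.")` should return the model's reply as a string. Nothing leaves the machine, which is the main appeal for private deployment.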

LM Studio

Graphical application for discovering, downloading, and running local AI models. Beginner-friendly interface with model search and performance monitoring.

Transformer Explainer

Interactive visualisation tool running a live GPT-2 model in your browser. Experiment with text and observe how internal components work together to predict next tokens.

Transformer Neural Net 3D Visualiser

Interactive 3D visualisation showing how text flows through a transformer neural network. Watch tokens move through layers and observe attention mechanisms.

Turnitin AI Detection

AI writing detection capabilities integrated with academic integrity platforms. Important caveat: Turnitin states the indicator "should not be used as the sole basis for adverse actions."

Attention Is All You Need (Vaswani et al., 2017)

The foundational paper introducing the Transformer architecture that underlies modern LLMs including GPT, Claude, and Gemini. Essential reading for understanding how AI language models work.
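For readers who want a feel for the paper's core operation, the sketch below implements scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, in plain Python lists. It is a teaching aid only: the function names are illustrative, and real models use batched tensor libraries, multiple heads, and learned projections that are omitted here.

```python
import math


def softmax(row):
    """Turn a list of scores into weights that sum to 1."""
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for small list-of-lists matrices.

    Q holds one query vector per row, K one key per row, V one value per row.
    """
    d_k = len(K[0])
    output = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        output.append(
            [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        )
    return output
```

A query that matches one key more strongly than the others pulls the output towards that key's value vector, which is how a token "attends" to relevant context.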

Language Models are Few-Shot Learners (Brown et al., 2020)

The GPT-3 paper demonstrating that large language models can perform tasks with minimal examples. Introduced the concept of "in-context learning" that defines modern AI interactions.
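In practice, "minimal examples" means placing a few worked examples directly in the prompt. The sketch below assembles such a few-shot prompt as plain text; the `Input:`/`Output:` layout and the function name `build_few_shot_prompt` are illustrative assumptions rather than a format prescribed by the paper.

```python
def build_few_shot_prompt(examples, query, instruction=""):
    """Assemble a few-shot prompt from (input, output) example pairs.

    The model is expected to continue the pattern and fill in the final
    'Output:' for the new query.
    """
    parts = [instruction] if instruction else []
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}")
    # Leave the last output blank for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


examples = [
    ("Your account is locked, click here now!", "suspicious"),
    ("Agenda attached for Tuesday's staff meeting.", "normal"),
]
prompt = build_few_shot_prompt(
    examples,
    "You have won a prize, send your bank details.",
    instruction="Label each message as suspicious or normal.",
)
```

The resulting `prompt` string can be pasted into any chat assistant; no retraining is involved, because the examples only shape the model's next prediction.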

Towards Best Practices for Open Datasets for LLM Training (Baack et al., 2025)

Outlines the critical role of open, well-governed datasets in building transparent and trustworthy AI systems. Essential reading for understanding training data governance.

Weber-Wulff et al. AI Detection Study

Peer-reviewed international study examining fourteen AI detection systems. Concluded none achieved reliable accuracy across tasks, with paraphrased and hybrid text particularly problematic.

Anthropic Threat Intelligence Reports

Regular reports documenting real-world AI misuse patterns including the August 2025 report on agentic AI weaponisation, North Korean employment fraud, and ransomware development.

Chatbot Arena Leaderboard

Crowdsourced AI benchmark using blind comparisons. Users vote on which of two anonymous responses is better, producing Elo-style ratings that reflect real-world preferences rather than synthetic benchmark scores.
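The way pairwise votes become ratings can be sketched with the classic Elo update. This is a simplified illustration: the leaderboard's actual statistical method may differ, and the K-factor of 32 and the function names are assumptions chosen for the example.

```python
def expected_score(rating_a, rating_b):
    """Probability that model A is preferred over model B under Elo."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a, rating_b, score_a, k=32):
    """Return updated (rating_a, rating_b) after one vote.

    score_a is 1.0 if A was preferred, 0.0 if B was, and 0.5 for a tie.
    k controls how far a single vote moves the ratings (assumed value).
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


# Two equally rated models; a user prefers model A.
print(elo_update(1000, 1000, 1.0))  # (1016.0, 984.0)
```

An upset win against a much higher-rated model moves the ratings further than an expected win, which is why many blind votes gradually sort models by real user preference.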

Artificial Analysis

Independent benchmarking platform comparing AI model performance, pricing, and speed. Useful for making informed decisions about which AI tool to use for specific tasks.

Common Crawl

Non-profit organisation providing free web crawl data used in LLM training. Understanding Common Crawl helps explain both the capabilities and limitations of AI systems.

The Stack Dataset

Large collection of permissively licensed source code used for training code generation models. Important for understanding how AI coding assistants are developed.

Content Authenticity Initiative

Coalition led by Adobe establishing open standards for content provenance. Addresses deepfake concerns through cryptographic verification of media origin and editing history.

C2PA Coalition

Coalition for Content Provenance and Authenticity developing technical standards for certifying digital content origin. Essential for understanding future content verification approaches.

WildChat Visualiser

Interactive tool for exploring how people actually use AI chatbots in the wild. Valuable for understanding real-world AI usage patterns in educational contexts.